이번 포스트에서는 PyTorch를 이용해 간단한 CNN 모델을 구축하고, 이를 통해 사자와 호랑이 이미지를 분류하는 방법을 알아보겠습니다. 데이터셋 로드부터 모델 학습, 평가까지의 전체 과정을 자세히 설명하겠습니다.

< 목차 >

1. 데이터셋 준비

2. 데이터셋 클래스 정의

3. 데이터 변환 설정

4. 데이터셋 분할 및 데이터 로더 생성

5. 간단한 CNN 모델 정의

6. 모델 학습 및 검증

7. 테스트 데이터 평가

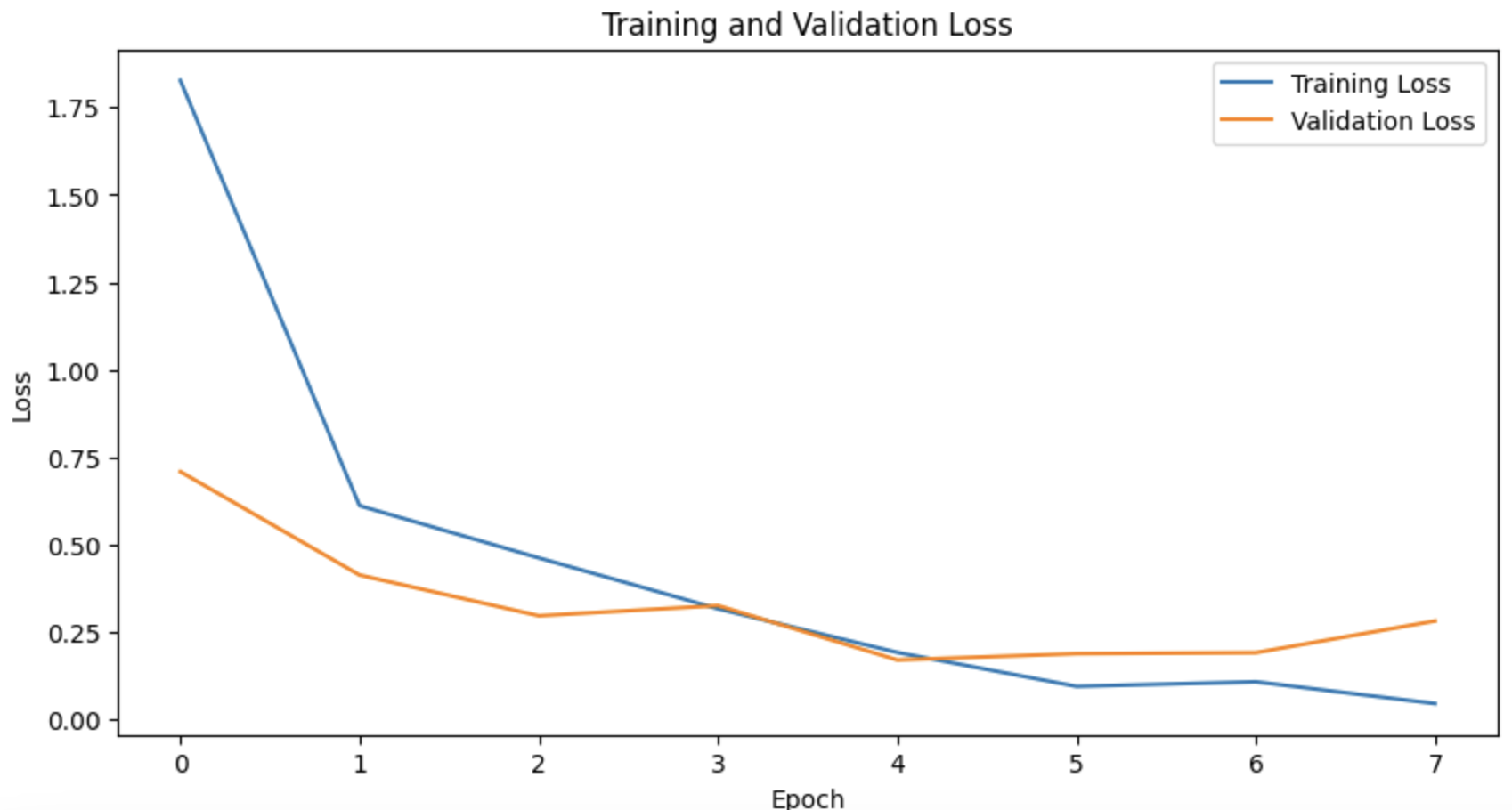

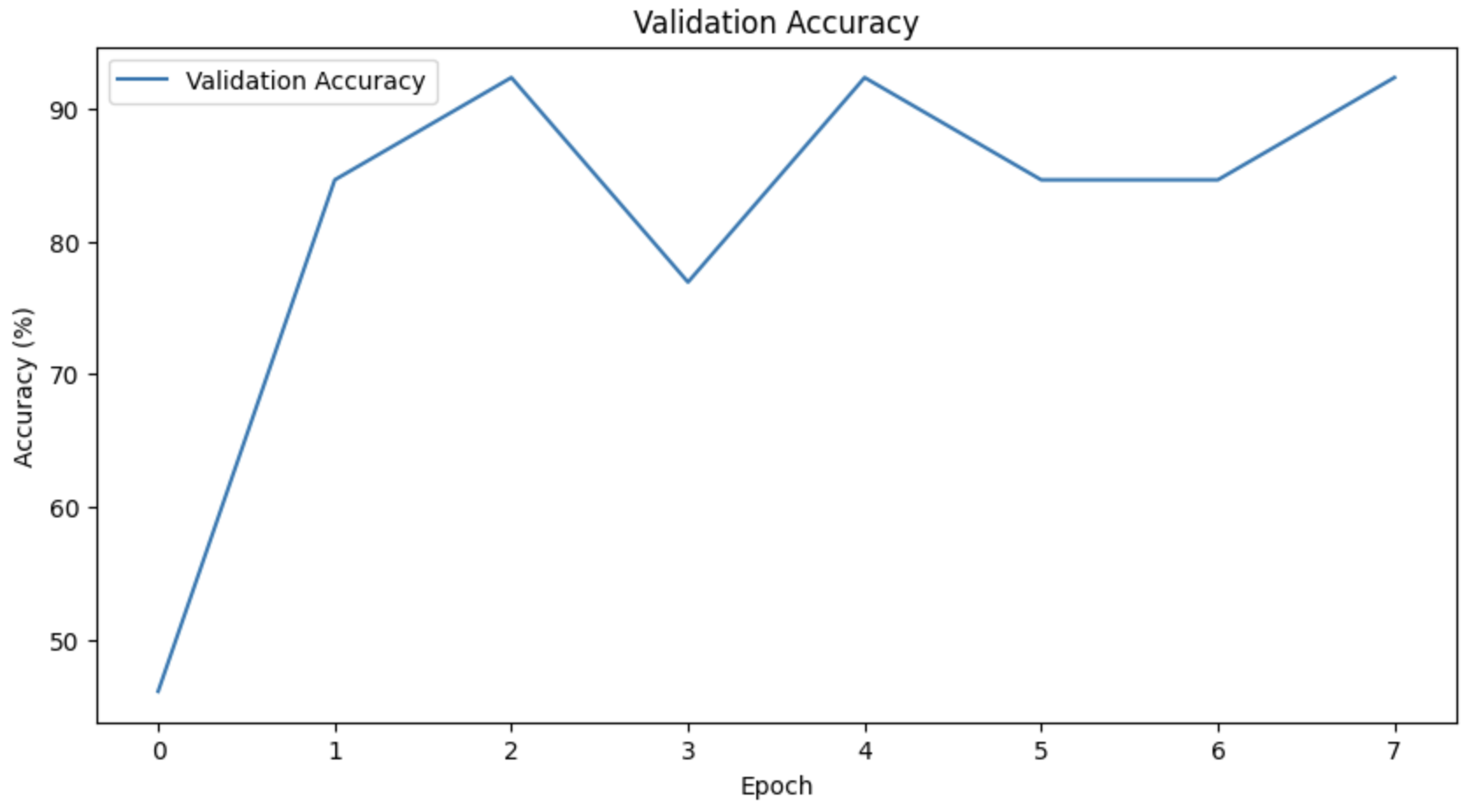

8. 학습 및 검증 손실, 정확도 시각화

9. 테스트 결과 시각화

10. 결과

1. 데이터셋 준비

먼저, 이미지 경로를 불러오고, 각 이미지를 레이블링합니다. 사자는 0, 호랑이는 1로 라벨을 지정합니다.

import glob

lion_image_paths = glob.glob('/mnt/lion/*.jpg')

tiger_image_paths = glob.glob('/mnt/tiger/*.jpg')

image_paths = lion_image_paths + tiger_image_paths

labels = [0] * len(lion_image_paths) + [1] * len(tiger_image_paths) # 0: 사자, 1: 호랑이

print(f'Total images: {len(image_paths)}, Total labels: {len(labels)}')

2. 데이터셋 클래스 정의

PyTorch의 Dataset 클래스를 상속받아 커스텀 데이터셋 클래스를 정의합니다. 이미지 변환(transform)은 학습과 테스트 단계에서 다르게 설정합니다.

from torchvision import transforms

from torch.utils.data import Dataset

from PIL import Image

class CustomDataset(Dataset):

def __init__(self, image_paths, labels, transform=None):

self.image_paths = image_paths

self.labels = labels

self.transform = transform

def __len__(self):

return len(self.image_paths)

def __getitem__(self, idx):

img_path = self.image_paths[idx]

image = Image.open(img_path).convert('RGB')

if self.transform:

image = self.transform(image)

label = self.labels[idx]

return image, label

3. 데이터 변환 설정

학습 데이터는 다양한 이미지 증강 기법을 사용해 데이터셋을 풍부하게 하고, 테스트 데이터는 기본적인 크기 조정만 수행합니다.

train_transform = transforms.Compose([

transforms.RandomResizedCrop(224, scale=(0.8, 1.0)),

transforms.RandomHorizontalFlip(),

transforms.RandomRotation(20),

transforms.ColorJitter(brightness=0.3, contrast=0.3, saturation=0.3, hue=0.2),

transforms.RandomGrayscale(p=0.1),

transforms.RandomPerspective(distortion_scale=0.2, p=0.5),

transforms.RandomAffine(degrees=0, translate=(0.1, 0.1), scale=(0.8, 1.2), shear=10),

transforms.ToTensor(),

])

test_transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

])

4. 데이터셋 분할 및 데이터 로더 생성

데이터셋을 학습, 검증, 테스트 세트로 나누고, 각각의 데이터 로더를 생성합니다.

from torch.utils.data import DataLoader, random_split

dataset = CustomDataset(image_paths=image_paths, labels=labels, transform=train_transform)

train_size = int(0.7 * len(dataset))

val_size = int(0.15 * len(dataset))

test_size = len(dataset) - train_size - val_size

print(f'Train size: {train_size}, Validation size: {val_size}, Test size: {test_size}')

train_dataset, val_dataset, test_dataset = random_split(dataset, [train_size, val_size, test_size])

val_dataset.dataset.transform = test_transform

test_dataset.dataset.transform = test_transform

train_loader = DataLoader(train_dataset, batch_size=4, shuffle=True)

val_loader = DataLoader(val_dataset, batch_size=4, shuffle=False)

test_loader = DataLoader(test_dataset, batch_size=4, shuffle=False)

5. 간단한 CNN 모델 정의

간단한 CNN 모델을 정의합니다. 2개의 컨볼루션 레이어와 2개의 FC 레이어로 구성됩니다.

import torch

import torch.nn as nn

import torch.optim as optim

import torch.nn.functional as F

class SimpleCNN(nn.Module):

def __init__(self):

super(SimpleCNN, self).__init__()

self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1)

self.pool = nn.MaxPool2d(kernel_size=2, stride=2, padding=0)

self.conv2 = nn.Conv2d(16, 32, kernel_size=3, stride=1, padding=1)

self.fc1 = nn.Linear(32 * 56 * 56, 512)

self.dropout = nn.Dropout(0.5)

self.fc2 = nn.Linear(512, 2)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 32 * 56 * 56)

x = F.relu(self.fc1(x))

x = self.dropout(x)

x = self.fc2(x)

return x

model = SimpleCNN()

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

6. 모델 학습 및 검증

모델을 학습하고, 검증 세트에서의 성능을 평가합니다. 또한, Early Stopping을 적용해 과적합을 방지합니다.

train_losses = []

val_losses = []

val_accuracies = []

best_val_accuracy = 0

early_stop_patience = 5

early_stop_counter = 0

# training

num_epochs = 50

for epoch in range(num_epochs):

model.train()

running_loss = 0.0

for images, labels in train_loader:

optimizer.zero_grad()

outputs = model(images)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

epoch_loss = running_loss / len(train_loader)

train_losses.append(epoch_loss)

print(f'Epoch [{epoch+1}/{num_epochs}], Loss: {epoch_loss}')

# Validate

model.eval()

val_loss = 0.0

correct = 0

total = 0

with torch.no_grad():

for images, labels in val_loader:

outputs = model(images)

loss = criterion(outputs, labels)

val_loss += loss.item()

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

val_accuracy = 100 * correct / total

val_losses.append(val_loss / len(val_loader))

val_accuracies.append(val_accuracy)

print(f'Validation Loss: {val_loss/len(val_loader)}, Validation Accuracy: {val_accuracy}%')

# Early stopping

if val_accuracy > best_val_accuracy:

best_val_accuracy = val_accuracy

early_stop_counter = 0

torch.save(model.state_dict(), 'best_model.pth')

else:

early_stop_counter += 1

if early_stop_counter >= early_stop_patience:

print("Early stopping triggered.")

break

model.load_state_dict(torch.load('best_model.pth'))

7. 테스트 데이터 평가

최종 모델을 테스트 데이터에서 평가하고, 테스트 정확도를 계산합니다.

model.eval()

correct = 0

total = 0

all_images = []

all_labels = []

all_preds = []

with torch.no_grad():

for images, labels in test_loader:

outputs = model(images)

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

all_images.extend(images.cpu().numpy())

all_labels.extend(labels.cpu().numpy())

all_preds.extend(predicted.cpu().numpy())

test_accuracy = 100 * correct / total

print(f'Test Accuracy: {test_accuracy}%')

8. 학습 및 검증 손실, 정확도 시각화

학습 및 검증 손실, 검증 정확도를 그래프로 시각화합니다.

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 5))

plt.plot(train_losses, label='Training Loss')

plt.plot(val_losses, label='Validation Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend()

plt.title('Training and Validation Loss')

plt.show()

plt.figure(figsize=(10, 5))

plt.plot(val_accuracies, label='Validation Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy (%)')

plt.legend()

plt.title('Validation Accuracy')

plt.show()

9. 테스트 결과 시각화

테스트 데이터에서 예측된 결과를 시각화합니다.

import numpy as np

classes = ['lion', 'tiger']

fig, axes = plt.subplots(2, 5, figsize=(15, 6))

axes = axes.ravel()

for i in range(10):

image = all_images[i].transpose((1, 2, 0))

image = (image * 255).astype(np.uint8)

label = all_labels[i]

pred = all_preds[i]

axes[i].imshow(image)

axes[i].add_patch(plt.Rectangle((0, 0), image.shape[1], image.shape[0], fill=False, edgecolor='red', linewidth=2))

axes[i].text(5, 25, f'Pred: {classes[pred]}', bbox=dict(facecolor='white', alpha=0.75))

axes[i].text(5, 50, f'True: {classes[label]}', bbox=dict(facecolor='white', alpha=0.75))

axes[i].axis('off')

plt.tight_layout()

plt.show()

10. 결과

학습 및 검증 손실

검증 정확도

테스트 결과

이상으로 사자와 호랑이 이미지를 분류하는 CNN 모델을 구축하는 전체 과정을 마쳤습니다. 이번 포스트가 이미지 분류 모델을 구축하는 데 도움이 되길 바랍니다. 추가적인 질문이 있으시면 언제든지 댓글로 남겨주세요!

'인공지능(AI) 📚' 카테고리의 다른 글

| MediaPipe Holistic을 활용한 사용자 특정행동 수행 여부 감지 (0) | 2024.06.19 |

|---|---|

| MediaPipe Holistic을 활용한 실시간 웹캠 모션 인식 (0) | 2024.06.19 |

| MediaPipe Holistic을 활용한 모션 인식 및 JSON 파일 추출 (0) | 2024.06.19 |